Remember Me: Memory and Forgetting in the Digital Age by Davide Sisto

Medford, Massachusetts. Polity Press. 2021. 155 pages.

MEMORY AND FORGETTING in the Digital Age is a descriptive subtitle for Italian philosopher Davide Sisto’s book, Remember Me. Early on, the author underlines that he does not subscribe to the common understanding of “memory” and “forgetting” as opposites. Instead, he insists that they are two sides of the same coin: “the very act of remembering implies an act of forgetting.” Memory is not total recall but the fictionalization of the past, which deviates from actual events, even if only in the level of detail it provides or the point of view from which it is recounted. This deviation requires forgetting.

One of the most profound ways in which our society has changed over the last decades is arguably through our use of the internet, social media, and smartphones. This has not only changed how we act and interact but also how we tell stories. In the initial pages of Remember Me, Sisto references Jonathan Gottschall’s beautiful book The Storytelling Animal—Homo fictus—which builds the thesis that humans’ defining feature is that they tell stories. Storytelling not only factors into our identity but is our identity, individual and shared. We live in stories. We are stories. Our ruminating mind’s stream of consciousness is constantly telling us the story of what we think, want, fear; who we were; what we did well or badly and why; and what we could do tomorrow.

Sisto sketches the ways in which technology has changed our memory-making by taking us on a journey that starts with the early days of the internet. It unfolds through many tales of people journaling the progression of terminal illness or a family mourning the murder of their daughter while still receiving pictures on social media that depict the victim with the husband who killed her. The author puts these illustrative examples into the context of novels, short stories, sci-fi, and dystopian movies. The English series Black Mirror, for example, is referenced throughout the book for having made fictionalized predictions of some of what we see today and, perhaps, of some of what is yet to come.

Still, for all the references to fiction and continental philosophy, we see none to psychology, neuro-, or computer science. Even in places where one might see an obvious opportunity to discuss some of the underlying science, little is provided. Despite social media featuring heavily in his 125 pages, Sisto makes no mention of our psychological propensities, heavily studied and exploited by Stanford social scientist B. J. Fogg, that make it so addictive. When discussing transhumanism—a subject deserving of more than the half-page it got—he references Aldous Huxley’s brother, Julian, who popularized the term, but does not explore how the field has evolved since Huxley’s 1957 publication. Likewise, late in his book, he dedicates four pages to the speculative possibility of mind-uploading but fails to discuss any ideas from AI or consciousness research on the topic. Perhaps most regrettably, empirical data is entirely missing from the book. Today “we pretend to live as if we never die,” Sisto writes. But, if that is so, why not discuss empirical studies on how facing death and grief has changed over the last decades in different parts of the world?

Individual anecdotes are of course useful to form hypotheses or to breathe life into what might otherwise be pure numbers. Fiction and philosophy still have an important part to play in how we understand our world. However, their role has changed from what it once was. Sisto’s often poignant accounts and wide literary and cinematic references do not make up for the fact that when discussing the psychological and societal effects of our increasingly digital world, we can do better than qualitative arguments aided by personal and third-person anecdotes.

Lastly, Sisto’s closing chapter, entitled “Conclusion,” will disappoint the reader who might expect to find there a thesis unifying the many voices and points made in the three previous chapters. Instead, it leaves us with the advice that “it is worth organizing a digital will” and suggests how to go about it. Calling for “a scrupulous and widespread Death Education, combined with a rational management of our multiple digital I’s” may be warranted, but it only goes a little way toward ordering and merging the author’s central arguments for how memory and forgetting have changed in the digital age.

Felix Haas

Zurich, Switzerland

Autumn 2021

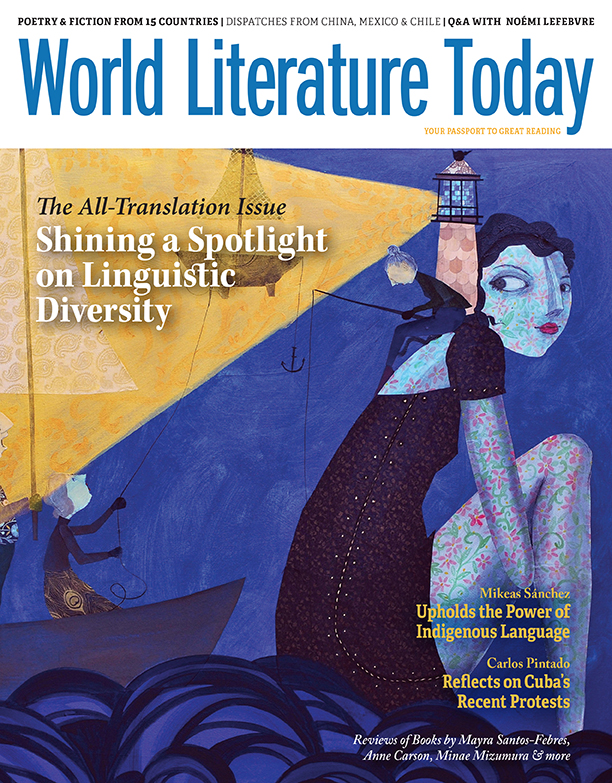

Translation takes the spotlight in WLT’s autumn issue, which—for the first time in its ninety-five-year history—is entirely devoted to the craft that makes world literature possible: every poem, story, essay, interview, and Notebook/Outpost contribution has been translated into English, and the entirety of the book review section is likewise dedicated to translated books.

Table of Contents